Google DeepMind and World Labs unveil AI tools to create 3D spaces from simple prompts

Google DeepMind and startup World Labs this week both revealed previews of AI tools that can be used to create immersive 3D environments from simple prompts.

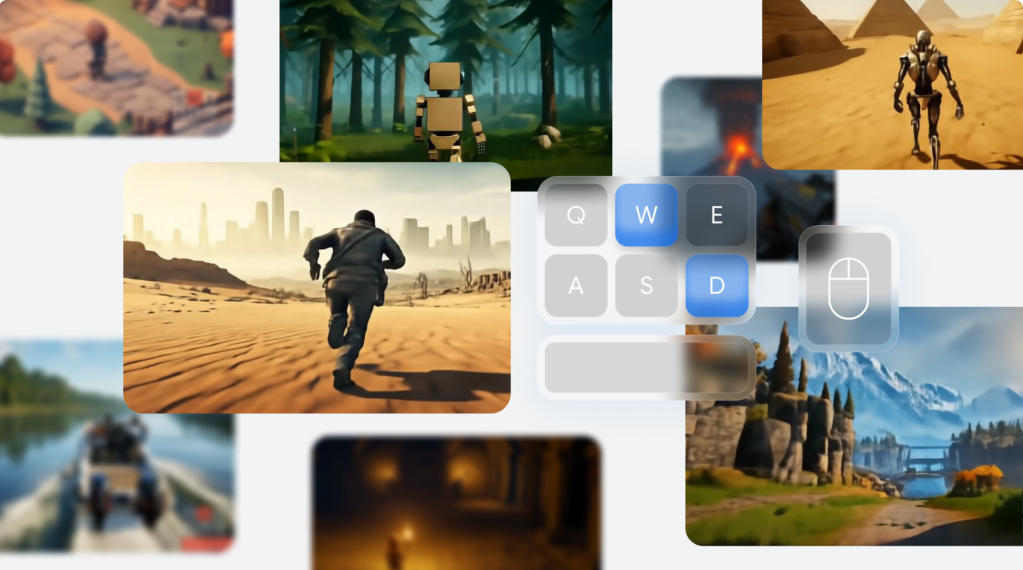

World Labs, the startup founded by AI pioneer Fei-Fei Li and backed by $230 million in funding, announced its 3D “world generation” model on Tuesday. It turns a static image into a computer game-like 3D scene that can be navigated using keyboard and mouse controls.

“Most GenAI tools make 2D content like images or videos,” World Labs said in a blog post. “Generating in 3D instead improves control and consistency. This will change how we make movies, games, simulators, and other digital manifestations of our physical world.”

One example is the Vincent van Gogh painting “Café Terrace at Night,” from which the AI model generated additional content to create a small area that can be viewed and moved around in. Others are more like first-person computer games.

World Labs also demonstrated the ability to add effects to 3D scenes and to control virtual camera zoom, for instance. (The various scenes are available to try out online.)

Creators who have tested the technology said it could help cut the time needed to build 3D environments and help users brainstorm ideas much faster, according to a video included in the blog post.

The 3D scene builder is a “first early preview” and is not available as a product yet.

Separately, Google’s DeepMind AI research division announced Genie 2 in a blog post on Wednesday, describing it as a “foundational world model” that enables an “endless variety of action-controllable, playable 3D environments.”

It’s the successor to the first Genie model, unveiled earlier this year, which can generate 2D platformer-style computer games from text and image prompts. Genie 2 does the same for 3D games that can be navigated in first-person view or via an in-game avatar that can perform actions such as running and jumping.

It’s possible to generate “consistent worlds” for up to a minute, DeepMind said, with most of the examples showcased in the blog post lasting between 10 and 20 seconds. Genie 2 can also remember parts of the virtual world that are no longer in view, reproducing them accurately when they’re observable again.

DeepMind said its work on Genie is still at an early stage; it’s not clear when the technology might be more widely available. Genie 2 is described as a research tool that can “rapidly prototype diverse interactive experiences” and train AI agents.

Google also announced that its generative AI (genAI) video model, Veo, is now available in a private preview to business customers using its Vertex AI platform. The image-to-video model will open up “new possibilities for creative expression” and streamline “video production workflows,” Google said in a blog post Tuesday.

Amazon Web Services also announced its range of Nova AI models this week, including AI video generation capabilities; OpenAI is thought to be launching Sora, its text-to-video software, later this month.